6 VR Design Principles for Hand Tracking

Posted: September 20, 2021

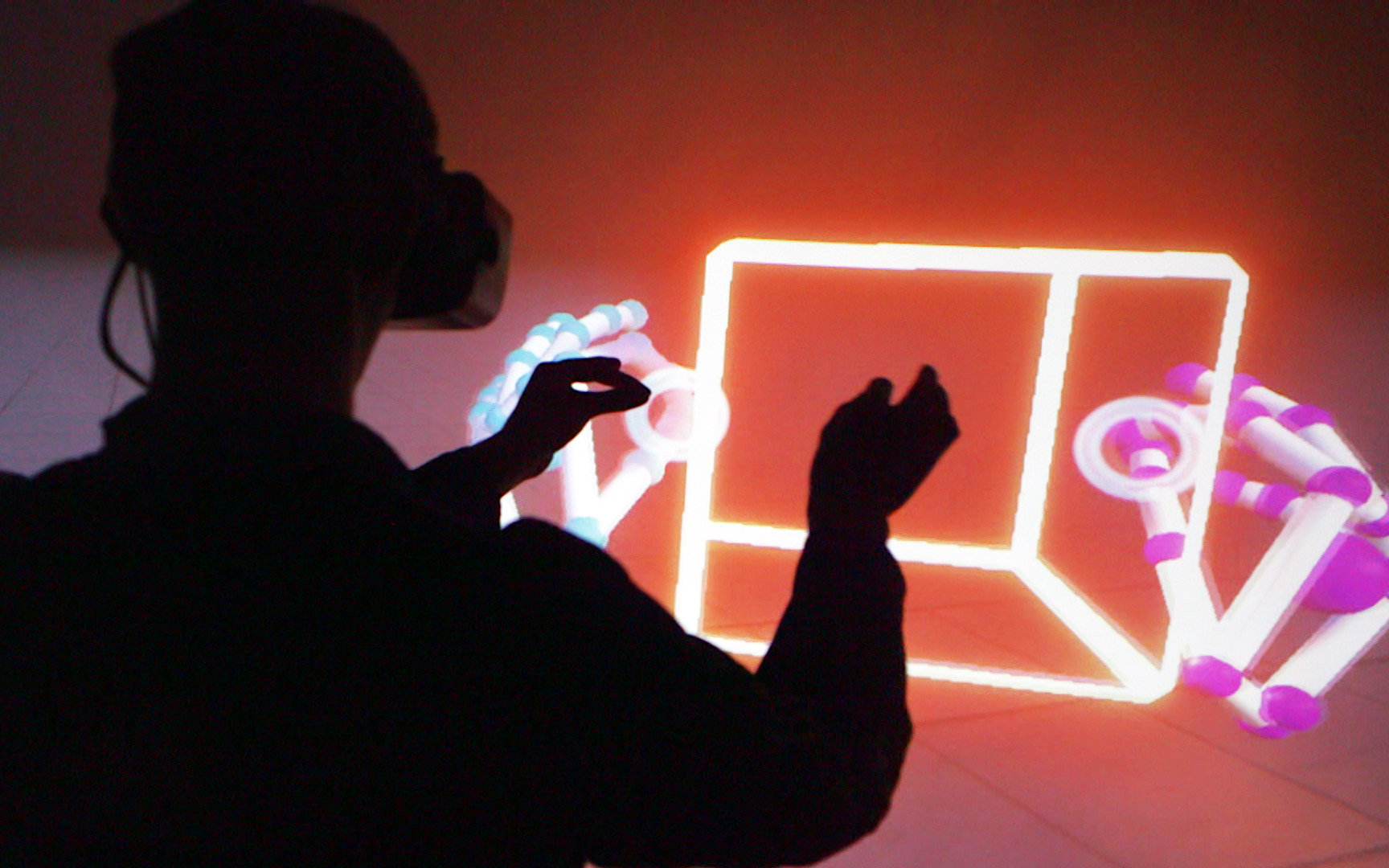

In virtual reality, hand tracking unlocks experiences that are more intuitive and realistic. VR interfaces optimized for hand tracking differ in a few key ways from those designed solely for controllers. Here are six of the key hand tracking design principles we’ve developed at Ultraleap.

By Matt Corrall, Design Director at Ultraleap

Mainstream adoption of VR means broadening appeal beyond early adopters. It means drawing in more diverse, technology-anxious, or even downright sceptical users. And in enterprise use-cases, it means developing applications that deliver measurable real-world impact.

Making how we interact in VR more intuitive and realistic is an important part of solving the puzzle of mainstream adoption. Allowing users to use their hands more naturally is central to that.

But where do you start with designing a hand tracking experience?

We’ve distilled our many years of designing hand tracking VR experiences into six basic principles. Designing a VR experience optimized for hand tracking isn’t rocket science, but it is a little bit different to designing solely for controllers.

By following a few simple hand tracking principles, you can get the most out of hand tracking – quickly and easily. Read on to find out more…

1. Make everything tangible and physical

When virtual objects around you behave much as they would in real life, interactions feel natural. Users no longer need to learn button presses.

Make every element – even UI controls like buttons and menus – three-dimensional, and have it behave with familiar physics as much as possible. Virtual objects work best when their form presents clear affordances to users – that is, the way they look makes it obvious how to use them without the need for instructions.

They should be the right size to be picked up, and if appropriate have their own handles, surface textures or indents to show how they should be grabbed, slid or rotated.

How users handle an object will also vary depending on who they are, and what they’re doing in the application. Rather than assigning specific gestures like “pinch” to the object, use our Interaction Engine to allow users to grab, push, and pull with any or all of their fingers.

2. Keep physical exertion minimal

Users should be able to reach everything they need to comfortably. They shouldn’t have to stretch, regularly raise their arms high or perform fatiguing movements. Consider positioning and how every object could be used, avoiding uncomfortable hand poses and repetitive motions.

3. Use abstract hand poses and gestures sparingly

Performing abstract hand poses or gestures (such as turning over a hand or pinching two fingers together) can be a convenient way to teleport or open a menu. However, they are difficult to recall unless people use the application regularly, and should be used sparingly.

Instead, build features through direct interactions with objects and the virtual environment wherever possible.

4. Ensure a wide range of people can enjoy your VR application

Remember that comfort, and what feels natural, differ from person to person. There is also a wide range of hand sizes and shapes – and the ways people interact are a bit less predictable than with VR controllers.

Always test your application with a broad, diverse range of users mid-development. Build in options for users to adjust things like panel positions.

We recommend a small “handle” below the panel that users can quickly grab to reposition panels without any chance of interference with other controls.

5. Make elements respond to proximity

Many hand tracking VR experiences don’t include mid-air haptic feedback. If yours doesn’t, users will need extra visual feedback from objects in order to interact with confidence. Interactive elements should react to approaching fingers with a small movement or colour change to indicate they can be used.
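As a rough illustration of proximity feedback, the sketch below maps the distance between a fingertip and an interactive element to a highlight strength between 0 and 1, which an application could use to drive a colour change or a small movement. The function name, the 10 cm hover radius and the linear falloff are assumptions made for this example, not part of any Ultraleap API.

```python
import math


def proximity_highlight(fingertip, element_center, hover_radius=0.10):
    """Return a highlight strength in [0, 1] for an interactive element.

    fingertip and element_center are (x, y, z) positions in metres.
    hover_radius is a hypothetical distance at which the element
    starts to react; tune it for your own application.
    """
    distance = math.dist(fingertip, element_center)
    if distance >= hover_radius:
        return 0.0  # too far away: no visual response
    # Linear falloff: strongest when the fingertip reaches the element.
    return 1.0 - distance / hover_radius


# Example: a fingertip 5 cm from a button gives a half-strength highlight.
strength = proximity_highlight((0.0, 0.0, 0.05), (0.0, 0.0, 0.0))
```

A smooth, continuous response like this lets users see the element "notice" their hand before they commit to touching it.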

6. ...and then make elements respond to touch

When “touched” by the hand or any number of fingers, virtual objects should respond with another colour change or movement. Audio feedback further helps to indicate, say, a successful button press.
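One way to think about principles 5 and 6 together is as a small state machine: an element is idle, hovered (a finger is nearby) or touched, and each change of state triggers its own feedback. The sketch below is a minimal version of that idea. The state names, distance thresholds and cue names are all hypothetical and chosen only for illustration.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    HOVER = "hover"
    TOUCHED = "touched"


# Hypothetical feedback cues to play when an element enters each state.
CUES = {
    State.IDLE: ["reset_appearance"],
    State.HOVER: ["highlight"],
    State.TOUCHED: ["press_colour", "click_sound"],
}


def classify(distance, hover_radius=0.10, touch_radius=0.01):
    """Map fingertip distance (metres) to an interaction state."""
    if distance <= touch_radius:
        return State.TOUCHED
    if distance <= hover_radius:
        return State.HOVER
    return State.IDLE


def update(previous, distance):
    """Return the new state and the cues to play, if the state changed."""
    current = classify(distance)
    cues = [] if current is previous else CUES[current]
    return current, cues


# Example: a finger approaches, touches, holds, then withdraws.
state = State.IDLE
history = []
for d in [0.30, 0.08, 0.005, 0.005, 0.20]:
    state, cues = update(state, d)
    history.append((state, cues))
```

Cues fire only on a change of state, so a finger resting on a button does not retrigger the click sound every frame.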

Take a deep dive into designing for hand tracking in VR in our full design guidelines

Now check out how we've put some of these design principles into practice at Ultraleap.

Buttons and panels

We’ve found that when using hand tracking, UI panels – often flat in VR – benefit from feeling more “mechanical”. This makes it obvious how to use them without the need for instructions.

In these examples, the components are raised slightly from the panel surface with a shadow that makes elevation clear. They also move when pushed, just like a mechanical button would.

You can also add an audible “click” at the point of activation – additional feedback that gives users yet more confidence they’ve used it successfully.
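To make the "mechanical" behaviour concrete, here is a minimal sketch of a push button that follows the finger along its travel and reports a single activation, the moment at which a click sound could be played. The class name, the 2 cm travel and the separate press and release thresholds are assumptions for this example. The gap between the two thresholds is a common way to stop a button chattering when a finger hovers near the activation point; it is not something the designs described here are confirmed to use.

```python
from dataclasses import dataclass


@dataclass
class PushButton:
    travel: float = 0.02      # total movement of the button face, metres (assumed)
    press_at: float = 0.8     # fraction of travel at which the button activates
    release_at: float = 0.5   # fraction of travel at which it re-arms
    depth: float = 0.0        # current displacement of the button face
    pressed: bool = False

    def update(self, finger_depth):
        """Move the button face with the finger.

        finger_depth is how far the fingertip has pushed past the
        button's resting surface, in metres. Returns True only on the
        update where the button activates (play the click here).
        """
        # The face follows the finger but cannot leave its housing.
        self.depth = min(max(finger_depth, 0.0), self.travel)
        fraction = self.depth / self.travel
        if not self.pressed and fraction >= self.press_at:
            self.pressed = True
            return True
        if self.pressed and fraction <= self.release_at:
            self.pressed = False
        return False


# Example: push in, wobble slightly, release, then push again.
button = PushButton()
clicks = [button.update(d) for d in
          [0.0, 0.01, 0.018, 0.02, 0.012, 0.018, 0.005, 0.02]]
```

In this run the button clicks twice: once on the first full push and once after it has been released and pushed again. The wobble in between does not retrigger it.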

XR keyboards

We recently developed an open-source XR keyboard for Unity that implements many of these principles.

Users need confidence they’re pushing the correct key. We made the buttons react to proximity and touch with state changes, and make an audible click when pressed.

The keyboard is small enough that users can keep it all clearly in their field of view and use it without turning their head. Instead of adding keypads and number rows, extra characters can be accessed by holding down on a key to activate a little pop-up.

Finally, the keyboard is repositionable, so everyone can use it comfortably.

Micro handles

The spherical micro handles in our Cat Explorer demo can be used to reposition panels and keyboards, or control sliding panels or pull-cord controls.

As with other objects, they communicate affordance – in this case, they look the right size to be pinched with two fingers. They react to proximity with a small, snapping movement towards the hand, and give audio feedback upon use.

Try our Cat Explorer demo.

Hand menus

Hand menus are particularly well-suited to applications where users move around a lot. We used just three or four buttons and reserved them for the features users need most.

As with other buttons on UI panels, they appear as three-dimensional objects which respond to proximity and touch with clear visual and audio feedback.

Use our Interaction Engine to build hand menus quickly.

Try hand menus in our Blocks and Paint demos.

Matt Corrall is Design Director at Ultraleap.
